SOA - Step by Step

There is no dearth of literature on SOA concepts, practices, design and so on. Surprisingly, though, there seems to be no step-by-step walkthrough of how the various SOA concepts can be incrementally evolved. This article is an attempt at one.

For SOA novices, one of the most easily understood concepts in SOA is the functional decomposition hierarchy, which is just a fancy name for the practice of top-down decomposition of a business process into services at the lowest level. So let's start with such a top-down approach to defining services in an SOA.

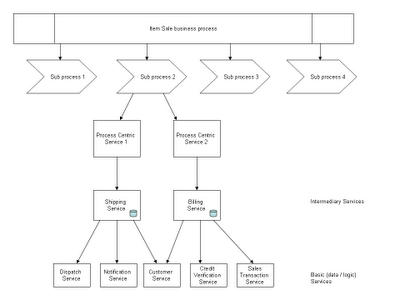

In a typical SOA, services are classified as:

- Basic services - data centric services and logic centric services

- Composite / Intermediary services

- Process centric services

Top-down functional decomposition proceeds as follows:

- A functional business process is documented as a process definition in a process modelling language

- The high level process is further broken down into numerous sub-processes

- Each sub process is further decomposed into process-centric services

- Each process-centric service is further decomposed into composite services

- Each composite service is further decomposed into many basic data-centric / logic-centric services

The diagram above aptly depicts this decomposition.
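To make the layering concrete, here is a minimal Java sketch of the three service levels. All class names, prices, and rates are hypothetical and chosen only for illustration; the article itself only names a billing and a shipping service.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Entry point, placed first so the file can also be launched directly with `java <file>`.
class DecompositionDemo {
    public static void main(String[] args) {
        OrderFulfilmentProcess process = new OrderFulfilmentProcess(
                new BillingService(new PriceDataService(), new TaxLogicService()),
                new ShippingService());
        System.out.println("Amount due in cents: " + process.amountDue("book", 2));
    }
}

// Basic data-centric service: owns access to price data (held in memory here).
class PriceDataService {
    private final Map<String, Long> priceInCents = new LinkedHashMap<>();

    PriceDataService() {
        priceInCents.put("book", 1200L); // assumed sample data
        priceInCents.put("pen", 150L);
    }

    long priceOf(String item) {
        Long price = priceInCents.get(item);
        if (price == null) {
            throw new IllegalArgumentException("Unknown item: " + item);
        }
        return price;
    }
}

// Basic logic-centric service: pure calculation, no data access.
class TaxLogicService {
    long taxFor(long amountInCents) {
        return amountInCents * 10 / 100; // assumed flat 10% rate
    }
}

// Composite (intermediary) service: combines basic services.
class BillingService {
    private final PriceDataService prices;
    private final TaxLogicService tax;

    BillingService(PriceDataService prices, TaxLogicService tax) {
        this.prices = prices;
        this.tax = tax;
    }

    long invoiceTotal(String item, int quantity) {
        long net = prices.priceOf(item) * quantity;
        return net + tax.taxFor(net);
    }
}

// Second composite service, kept trivial for brevity.
class ShippingService {
    long shippingCost(int quantity) {
        return 500L + 50L * quantity; // assumed base fee plus per-item fee
    }
}

// Process-centric service: orchestrates composite services for one business process.
class OrderFulfilmentProcess {
    private final BillingService billing;
    private final ShippingService shipping;

    OrderFulfilmentProcess(BillingService billing, ShippingService shipping) {
        this.billing = billing;
        this.shipping = shipping;
    }

    long amountDue(String item, int quantity) {
        return billing.invoiceTotal(item, quantity) + shipping.shippingCost(quantity);
    }
}
```

Each level depends only on the level directly beneath it, which is what later allows a composite service such as billing to be packaged and exposed on its own.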

So far we have identified services, but is this all that SOA is about? How does the UI interact with the processes and services? How are the services deployed? Where does a service container fit in? Where and when does an ESB get used? All these questions, seemingly orthogonal to the decomposition hierarchy, crop up in our minds.

Well, let's look at them in a logically incremental way.

To begin with, let's assume we build services at the basic, intermediary, and process-centric levels, following SOA guidelines for designing and developing services. That is, all our services are decoupled, cohesive, etc. All these services are unit tested and, for the time being, faceless. All are implemented as, say, Java classes which live in one big codebase!

We already have a web application which acts as the primary UI client. In the simplest deployment scenario, we ship all our service classes in the WEB-INF/classes folder of the client's web application. In this case all the service interfaces are Java-based.

But are the services re-usable? Should another project come to us and ask about re-using the billing service, can we say yes? Not right now. But let's improve our deployment units. Each intermediary service and its related classes can go into a separate jar file. In the above example we would have 2 jar files, shipping-services.jar and billing-services.jar. This way, we can offer the billing service as a re-usable service, though only as a library. But re-using services in this fashion across the enterprise can be quite tedious. The service configuration needs to be explained to other projects through documents, and every time there is an upgrade or a defect fix, you need to notify the projects which re-use your service. Projects may not want such integration at the API level. Instead, re-use would be much easier if you could expose your service to other projects through a variety of integration options.

A service can be exposed for integration using a variety of protocols and technologies.

For example, our billing service can be exposed via HTTP as a simple JSP/servlet, a SOAP web service, or a RESTful web service. Implementing this is quite trivial: all we need to do is wrap our billing-services.jar in a war file and throw in the third-party jars required by the technology stack, such as SOAP-WS, and we can easily host our billing service on a simple web application server. If we needed to expose our service as EJBs, we could just as well wrap our war inside an EAR and host it on an EJB-compliant application server.
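As a rough illustration of exposing the billing logic over HTTP, the sketch below swaps the servlet/war setup described above for the JDK's built-in `com.sun.net.httpserver.HttpServer`, purely so the example stays self-contained. The `BillingLogic` class, the `/billing/total` path, and the `price` and `qty` query parameters are all assumptions made for this example.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

// Entry point: starts the endpoint on a free port, reports it, and shuts down again.
class BillingHttpDemo {
    public static void main(String[] args) throws IOException {
        HttpServer server = BillingEndpoint.start(new BillingLogic(), 0);
        System.out.println("Billing service listening on port " + server.getAddress().getPort());
        server.stop(0); // a real host would keep running instead
    }
}

// Hypothetical stand-in for the classes packaged in billing-services.jar.
class BillingLogic {
    long totalInCents(long unitPriceInCents, int quantity) {
        return unitPriceInCents * quantity;
    }
}

// Thin HTTP facade: the billing logic itself knows nothing about HTTP.
class BillingEndpoint {
    static HttpServer start(BillingLogic billing, int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("localhost", port), 0);
        server.createContext("/billing/total", exchange -> handle(billing, exchange));
        server.start();
        return server;
    }

    static void handle(BillingLogic billing, HttpExchange exchange) throws IOException {
        String body;
        int status;
        try {
            body = respond(billing, exchange.getRequestURI().getRawQuery());
            status = 200;
        } catch (IllegalArgumentException e) { // also covers NumberFormatException
            body = "{\"error\":\"bad request\"}";
            status = 400;
        }
        byte[] bytes = body.getBytes(StandardCharsets.UTF_8);
        exchange.getResponseHeaders().set("Content-Type", "application/json");
        exchange.sendResponseHeaders(status, bytes.length);
        try (OutputStream out = exchange.getResponseBody()) {
            out.write(bytes);
        }
    }

    // Maps a query string such as "price=1200&qty=3" to a JSON response body.
    static String respond(BillingLogic billing, String query) {
        if (query == null) {
            throw new IllegalArgumentException("missing query");
        }
        long price = -1;
        int qty = -1;
        for (String pair : query.split("&")) {
            String[] kv = pair.split("=", 2);
            if (kv.length != 2) {
                continue;
            }
            if (kv[0].equals("price")) {
                price = Long.parseLong(kv[1]);
            } else if (kv[0].equals("qty")) {
                qty = Integer.parseInt(kv[1]);
            }
        }
        if (price < 0 || qty < 0) {
            throw new IllegalArgumentException("price and qty are required");
        }
        return "{\"totalInCents\":" + billing.totalInCents(price, qty) + "}";
    }
}
```

The point of the split is that `BillingLogic` stays a plain Java class, so the same jar could sit behind a servlet, a SOAP stack, or a service container without change.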

Case for the light-weight Service container

If we are ready to forgo the EJB interface option, we can choose thin, modular service containers like Mule and ServiceMix for hosting our billing-services.jar. Most service containers support hosting services based on POJOs, as well as common integration technologies like HTTP, SOAP, JMS, etc.

Simpler service consumers with standard access to service

The projects intending to reuse our service will act as service consumers and can have very simple, standard client code for accessing the service. For example, to access a RESTful web service, all that the service consumer requires is the ability to frame HTTP requests.
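A consumer along these lines might look like the following sketch, which uses the standard `java.net.http.HttpClient` (Java 11 or later). The base URL, the `/billing/total` path, and the `price` and `qty` parameters are hypothetical; a real consumer would use whatever contract the billing service actually publishes.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Entry point: only builds and prints the request, so it runs without a live service.
class BillingClientDemo {
    public static void main(String[] args) {
        HttpRequest request = BillingClient.totalRequest("http://localhost:8080", 1200, 3);
        System.out.println(request.method() + " " + request.uri());
    }
}

class BillingClient {
    // Frames the HTTP request; this is all the consumer needs to know about the service.
    static HttpRequest totalRequest(String baseUrl, long unitPriceInCents, int quantity) {
        URI uri = URI.create(baseUrl + "/billing/total?price=" + unitPriceInCents + "&qty=" + quantity);
        return HttpRequest.newBuilder(uri)
                .timeout(Duration.ofSeconds(5))
                .header("Accept", "application/json")
                .GET()
                .build();
    }

    // Sends the request and returns the raw response body; requires a running service.
    static String fetchTotal(HttpClient client, String baseUrl, long unitPriceInCents, int quantity)
            throws IOException, InterruptedException {
        HttpResponse<String> response = client.send(
                totalRequest(baseUrl, unitPriceInCents, quantity),
                HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("Billing service returned HTTP " + response.statusCode());
        }
        return response.body();
    }
}
```

Note that the consumer has no compile-time dependency on any billing classes; the only shared knowledge is the URL and the request format.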

In fact, our own web application UI can act as a service consumer for the billing service hosted separately in a service container like Mule!

Which services to expose?

Though the billing service was a very good example of an independent service that can be re-used by other projects in the enterprise, you will often be faced with services which you are tempted to expose but which don't really have any takers. The reason is that not all intermediary services make sense when used outside the context of a strong business process. What you might want to do in such a case is move up the decomposition hierarchy and expose the process-centric service instead, if it makes business sense to do so. Finally, only those services that are being asked for should be exposed. There is no need to expose services nobody has asked for, in the larger context of the enterprise service requirements, just to emphasize the advantages of SOA.

Case for an ESB

Making our billing service network addressable no doubt helps make it readily re-usable across the enterprise, but there is still coupling between the service consumers and our service, in terms of both the location and the interfaces that our consumers are aware of. If we were to change the location of the service or any of its interfaces, our consumers would be impacted.

An elegant way out is provided by the introduction of an ESB. An ESB can act as an intermediary between our physical service and our service consumers. The consumers need be aware only of a logical end-point on the ESB; the ESB resolves this end-point to the physical address of the service. The ESB can also provide routing and load balancing should traffic to our service increase. More importantly, the interface between our ESB and the consumer can be abstract, with the transformation between the abstract ESB interface and the concrete service interface being provided declaratively through ESB configuration. An ESB can also provide features such as logging, QoS add-ons, service call audit and SLA monitoring, security, etc.
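The core idea of logical end-points, load balancing, and message transformation can be sketched in a few lines of Java. This `MiniBus` class is a toy written for this article, not the API of Mule, ServiceMix, or any real ESB. The host names and message formats are made up, and the transformation is given in code, whereas a real ESB would typically take it from configuration.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.UnaryOperator;

// Entry point: registers one logical end-point and routes two messages through it.
class MiniBusDemo {
    public static void main(String[] args) {
        MiniBus bus = new MiniBus();
        bus.register("billing",
                List.of("http://host-a:8080/billing", "http://host-b:8080/billing"),
                message -> message.replace("amount=", "totalInCents="));
        System.out.println(bus.route("billing", "amount=3600"));
        System.out.println(bus.route("billing", "amount=150"));
    }
}

// Toy bus: maps a logical end-point to physical addresses plus a message transformation.
class MiniBus {
    private static final class Route {
        final List<String> physicalAddresses;
        final UnaryOperator<String> transform;
        final AtomicInteger next = new AtomicInteger();

        Route(List<String> physicalAddresses, UnaryOperator<String> transform) {
            this.physicalAddresses = physicalAddresses;
            this.transform = transform;
        }
    }

    private final Map<String, Route> routes = new ConcurrentHashMap<>();

    void register(String logicalEndpoint, List<String> physicalAddresses,
                  UnaryOperator<String> transform) {
        if (physicalAddresses.isEmpty()) {
            throw new IllegalArgumentException("At least one physical address is required");
        }
        routes.put(logicalEndpoint, new Route(List.copyOf(physicalAddresses), transform));
    }

    // Resolves the logical end-point, picks an address round-robin, and converts the
    // consumer's abstract message into the concrete format the service expects.
    // Returns a description of what would be dispatched rather than sending anything.
    String route(String logicalEndpoint, String message) {
        Route route = routes.get(logicalEndpoint);
        if (route == null) {
            throw new IllegalArgumentException("No route for end-point: " + logicalEndpoint);
        }
        int index = Math.floorMod(route.next.getAndIncrement(), route.physicalAddresses.size());
        return route.physicalAddresses.get(index) + " <- " + route.transform.apply(message);
    }
}
```

Consumers only ever mention the logical name `billing`, so moving the service to another host or renaming a field becomes a change to the bus registration rather than to every consumer.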

Summary

No doubt, SOA needs to be seen in the light of architecture and service reuse at the enterprise level, but the implications of an SOA for a single, simple web application architecture are important as well. Hopefully our walkthrough of applying SOA to a traditional web application has helped bridge the gap between lofty SOA principles and guidelines and the ground reality we face as developers every day :)